Introduction

In the ever-evolving world of artificial intelligence, buzzwords often come and go. One term that’s been making waves recently is Model Context Protocol (MCP). Anthropic announced MCP in late 2024, and while headlines sometimes tout it as a revolutionary breakthrough, it’s important to look past the hype and understand what it truly means for AI and for us. Let’s take a closer look at the concepts, benefits, challenges, and implications of MCP.

The Current Landscape of Large Language Models

Large Language Models (LLMs) have impressed us with their ability to understand and generate human-like text. However, there’s a catch. Despite their remarkable abilities in handling language, LLMs are inherently limited. They excel at predicting the next word or answering queries based on vast training data, yet they lack the intrinsic capability to take meaningful actions in the real world. For example, if a user asks for a weather forecast, the LLM can only rely on the data it was trained on, which is typically months old by the time the model is released. As a result, it lacks real-time information and can only answer general questions about past weather trends.

To bridge this gap, early solutions attempted to connect LLMs to external tools and APIs. This allowed them to perform tasks like searching the internet or interacting with databases. Unfortunately, this approach introduced a new set of challenges.

At the start of 2024, OpenAI launched the GPT Store as a hub for custom, AI-driven services built on ChatGPT, yet it ultimately failed to gain the expected market traction.

Why It Didn’t Succeed: Despite its promising concept, the store struggled with several issues that hindered its success. First, the user interface and discoverability were subpar, making it difficult for users to find high-quality GPT applications amid a sea of mediocre offerings. Second, an unclear monetization strategy left developers and users without compelling financial incentives or clear value propositions. Finally, market saturation—with numerous alternative solutions already available—meant the store couldn’t differentiate itself effectively in an increasingly competitive space.

More fundamentally, each external tool came with its own API conventions and quirks, making integration a complicated and sometimes frustrating process. Imagine trying to build a system where every component speaks a different dialect.

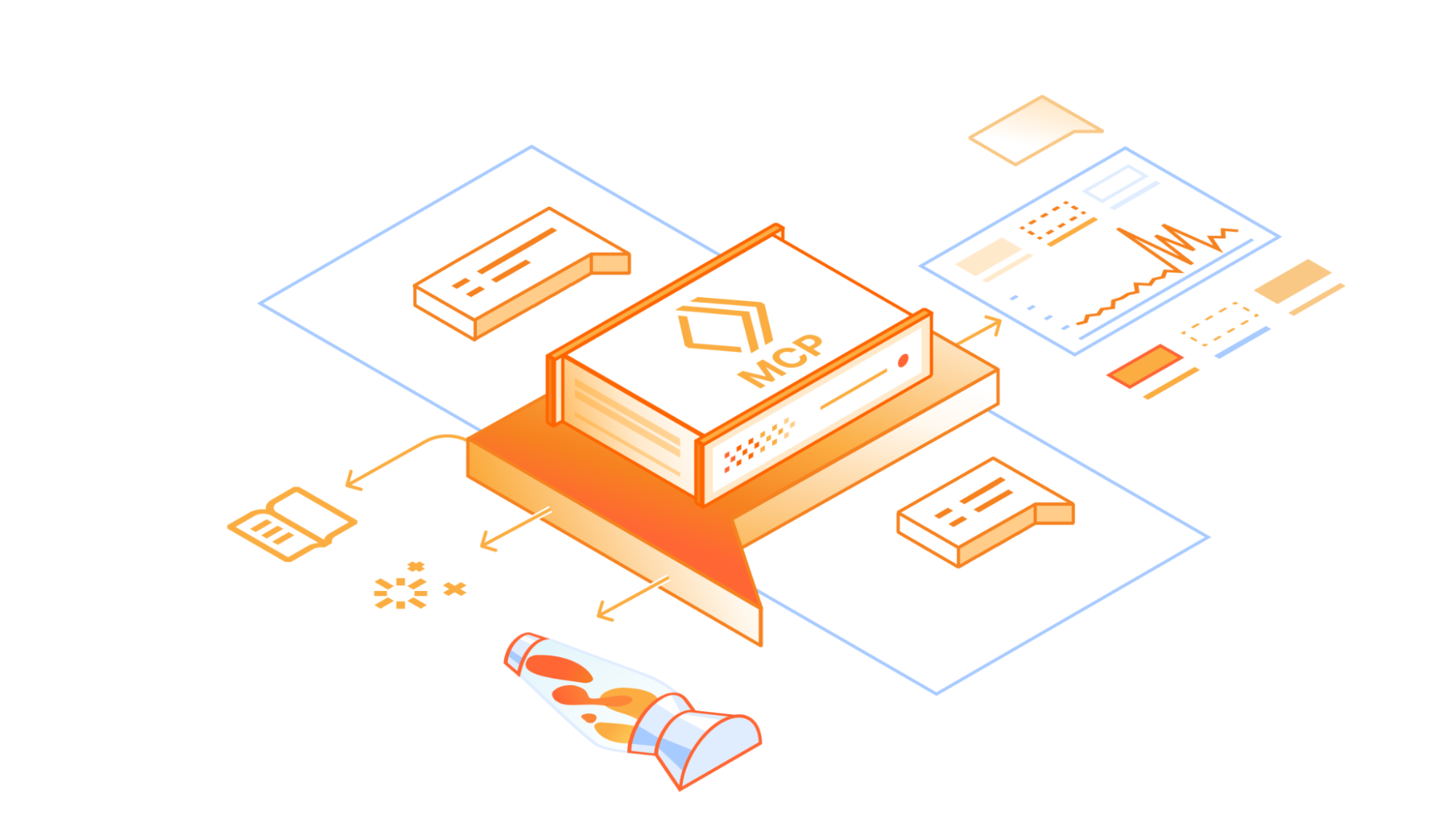

Enter Model Context Protocol (MCP)

MCP aims to solve these integration headaches by providing a standardized layer of communication between LLMs and external services. Think of MCP as a universal translator. Instead of forcing an LLM to learn the unique "languages" of countless APIs, MCP converts these varied communication styles into a consistent format that the LLM can easily understand.

How MCP Works

The MCP ecosystem is built on a few key components:

- MCP Client: This is the direct interface for LLMs, found in platforms like Tempo, Windsurf, and Cursor.

- MCP Protocol: A set of rules and specifications that governs how the client and server communicate.

- MCP Server: Acting as the translator, this server takes the specific capabilities of an external service and presents them in an MCP-compliant format. Service providers typically manage this component.

- External Service: Whether it’s a database, CRM, or another tool, these services supply the capabilities that the LLM needs.
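To make the protocol component a little more concrete: MCP messages follow JSON-RPC 2.0, and tool discovery and invocation use the `tools/list` and `tools/call` methods. The sketch below only builds those request payloads with the standard library. The `make_request` helper, the `get_forecast` tool name, and its `city` argument are assumptions chosen for illustration, not something a particular server is guaranteed to expose.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict of the kind an MCP client sends."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# Discovery: ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Invocation: call one tool by name with structured arguments.
# "get_forecast" and "city" are hypothetical, chosen for illustration.
call_tool = make_request(
    "tools/call",
    {"name": "get_forecast", "arguments": {"city": "Berlin"}},
)

if __name__ == "__main__":
    print(json.dumps(list_tools))
    print(json.dumps(call_tool, indent=2))
```

Because every server answers the same two request shapes, the client does not need service-specific code to find out what a server can do.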

A Step-by-Step Example of an MCP Workflow

To demonstrate how MCP enhances communication between AI and external services, let’s walk through a typical workflow:

- Initialization – The host AI application starts an MCP client.

- Connection – The client establishes a connection with an MCP server (e.g., one that provides weather data, like weather.py).

- Discovery – The client queries the server to identify available tools and capabilities.

- User Interaction – A user asks the AI a question.

- Invocation – The AI, via the MCP client, invokes a relevant tool (e.g., get_forecast).

- Data Fetching – The MCP server retrieves the requested weather data.

- Data Relay – The server sends the data back to the MCP client.

- Response Generation – The client delivers the data to the AI, which processes and presents it as a user-friendly response.

This step-by-step process shows how MCP creates a seamless bridge between AI and external services, simplifying what was once a complex integration landscape. With this in place, an LLM can retrieve current weather data directly from the weather MCP server, a modern twist on traditional API integration.
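The workflow above can be sketched as a toy, in-process simulation. This is not the real MCP SDK or wire protocol: `ToyWeatherServer`, `ToyClient`, and the canned forecast data are all hypothetical stand-ins, meant only to show the order of connection, discovery, invocation, and relay.

```python
class ToyWeatherServer:
    """Stands in for an MCP server; a real one would call a weather API."""

    def __init__(self):
        # Tool registry: name -> (description, handler)
        self._tools = {
            "get_forecast": ("Return a forecast for a city", self._get_forecast),
        }

    def list_tools(self):
        # Discovery step: describe what the server can do.
        return [{"name": n, "description": d} for n, (d, _) in self._tools.items()]

    def call_tool(self, name, arguments):
        # Invocation and data fetching steps.
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        _, handler = self._tools[name]
        return handler(**arguments)

    def _get_forecast(self, city):
        # Canned data so the example runs offline.
        return {"city": city, "forecast": "sunny", "high_c": 21}


class ToyClient:
    """Stands in for the MCP client embedded in the host application."""

    def __init__(self, server):
        self.server = server  # connection
        self.tools = {t["name"] for t in server.list_tools()}  # discovery

    def invoke(self, name, **arguments):
        if name not in self.tools:
            raise ValueError(f"server does not offer {name}")
        return self.server.call_tool(name, arguments)  # invocation and relay


def answer(client, city):
    # Response generation: a real LLM would phrase this itself.
    data = client.invoke("get_forecast", city=city)
    return f"{data['city']}: {data['forecast']}, high of {data['high_c']} C"


if __name__ == "__main__":
    client = ToyClient(ToyWeatherServer())
    print(answer(client, "Berlin"))
```

The point of the design is that the client learns tool names at runtime from `list_tools` rather than hard-coding them, which is what lets one client work with many servers.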

Promises and Pitfalls: The MCP Landscape

While MCP is an exciting development, it’s still in its early stages and comes with both opportunities and challenges.

The Promising Aspects

- Simplified Integration: MCP significantly reduces the complexity of linking LLMs with various external services.

- Expanded Capabilities: With easier access to external resources, LLMs can potentially handle a broader range of tasks.

- Enhanced Cohesion: By providing a unified communication layer, MCP can lead to more reliable interactions between LLMs and the tools they use.

Consider an internal data querying tool: it can make a huge difference for a company by letting teams easily access and analyze sales data, so anyone can pull a sales report when they need it. It helps different teams work together better and speeds up decision-making.

But with great power comes great responsibility: when it comes to handling more complex use cases, there are some challenges that companies need to tackle.

The Challenges Ahead

Authentication Challenges

- Secure Onboarding: Integrating robust, secure authentication methods remains a work in progress. The current API onboarding process can be cumbersome and requires manual configurations, which can slow down the setup for new users.

- Standardization Roadmap: Plans are in place to incorporate standardized protocols (such as OAuth), but until these are fully integrated, inconsistencies in authentication may persist. This can lead to fragmented security experiences across different platforms.

- User Experience Impact: The added complexity in authentication might deter startups and developers from adopting the platform if the process remains overly complicated or time-consuming.

Identity Management

- Defining the Acting Entity: A significant challenge is determining whose identity is used during interactions—whether it’s the end user, the LLM itself, or the MCP layer.

- Potential OAuth Integration: Future support for OAuth could help standardize identity management by providing clear, delegated access controls.

- Consistency Across Services: Without a clear identity management strategy, different services might handle requests differently, potentially leading to security breaches and data integrity issues.

Vendor Dynamics

- Competing Standards: MCP originates from Anthropic, which raises questions about how it will align or compete with similar initiatives from other giants like OpenAI.

- Ecosystem Fragmentation: The presence of multiple competing platforms—such as OpenAI’s Agents SDK—could force developers to choose between standards, reducing interoperability and possibly hindering innovation.

- Strategic Partnerships: The success of MCP might depend on whether it can forge strategic alliances or differentiate itself effectively in the broader AI ecosystem.

Technical Hurdles

- Server Setup Complexity: Early adopters describe setting up MCP servers as “annoying” due to complex configurations and integration challenges. Smoothing out these friction points with better documentation or automated setup tools is critical for broader adoption.

- Scalability Concerns: As usage scales, ensuring that the infrastructure can handle increased loads without compromising performance or security will be essential.

- Integration with Legacy Systems: Aligning MCP with existing enterprise systems and legacy software often requires additional middleware, adding another layer of technical difficulty.

Hype vs. Reality

- Expectations Management: There is a risk that the current enthusiasm around MCP might outpace its practical utility. Startups and developers could become disillusioned if the platform fails to deliver measurable benefits.

- Proof of Concept: It's important for early success stories to be tempered with rigorous testing and validation, ensuring that the technology meets real-world needs before widespread adoption.

- Market Adoption: Overpromising and underdelivering could impact trust in the platform, making it crucial for vendors to manage expectations carefully.

Long-Lived Credentials

- Extended Validity Risks: Long-lived credentials, which do not expire or rotate frequently, pose a significant security risk. If compromised, they provide attackers with extended access to systems.

- Increased Exposure: The longer credentials remain active, the greater the risk of misuse. Implementing automated rotation and expiration can help mitigate these risks.

- Mitigation Strategies: Future systems should incorporate mechanisms for regular credential renewal to limit the exposure window.
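As a minimal sketch of the renewal idea, the code below issues tokens with a short time-to-live and rotates them once they expire. The `Credential` type, the helper names, and the 15-minute default are assumptions for illustration; MCP itself does not prescribe this mechanism.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Credential:
    token: str
    expires_at: float  # seconds since the epoch


def issue_credential(ttl_seconds=900, now=None):
    """Mint a random token that is only valid for ttl_seconds."""
    now = time.time() if now is None else now
    return Credential(secrets.token_urlsafe(32), now + ttl_seconds)


def is_valid(cred, now=None):
    """A credential is valid strictly before its expiry time."""
    now = time.time() if now is None else now
    return now < cred.expires_at


def get_fresh(cred, ttl_seconds=900, now=None):
    """Return cred if still valid, otherwise rotate to a new one."""
    return cred if is_valid(cred, now) else issue_credential(ttl_seconds, now)
```

With a short lifetime, a leaked token is only useful to an attacker until `expires_at`, which is the exposure window the bullets above describe.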

Lack of Access Control Governing Secrets

- Unrestricted Secret Sharing: Currently, there is insufficient access control over sensitive information such as API keys and configuration data.

- Risk of Unauthorized Access:

- One significant challenge is that the MCP framework currently lacks a standardized method for authorization. While it's possible to implement custom authorization, doing so requires extensive mapping and translation of diverse credentials, tokens, and permissions from multiple external services. This complexity not only increases the likelihood of errors and security vulnerabilities but also makes it difficult to create a unified, robust system.

- The proposed OAuth 2.1 authorization flow outlined in the Model Context Protocol (MCP) specification focuses on managing authorization between the MCP client and the MCP server. However, this approach does not extend to controlling user access to specific contexts once they have access to the tool.

- In this flow, the MCP server acts as both an OAuth client (to the third-party authorization server) and an OAuth authorization server (to the MCP client). The process involves the MCP client initiating a standard OAuth flow with the MCP server, which then redirects the user to a third-party authorization server. After the user authorizes with the third-party server, the MCP server exchanges the authorization code for an access token and completes the OAuth flow with the MCP client.

- While this mechanism secures the communication between the MCP components and external services, it does not inherently provide fine-grained access controls within the MCP environment. Once a user gains access to a tool via the MCP client, there are no built-in safeguards to restrict or monitor their access to specific contexts or data within that tool. This limitation highlights the need for additional access control measures within the MCP framework to ensure that users can only interact with authorized contexts, thereby enhancing overall security and compliance.

- Imagine an MCP server tasked with interfacing with Slack to fetch or post messages. Slack requires secure API tokens to authenticate requests. Without a standardized authorization method within MCP, you might implement a custom solution that maps user identities and permissions to the appropriate Slack API token. However, if this custom logic is not thoroughly vetted or uniformly applied, it could lead to scenarios where the API token is accidentally exposed in logs or misrouted to an unauthorized component. An attacker, for instance, might intercept these tokens, gain unauthorized access to Slack channels, and potentially retrieve or manipulate sensitive messages.
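The Slack scenario suggests two safeguards a custom server could add on its own: a per-user check before a tool runs, and redaction of tokens before anything is logged. The sketch below is one hedged way to do that. The `POLICY` table, the tool names, and the function names are hypothetical, and no real Slack API call is made.

```python
import re

# Hypothetical policy: which user may call which tool on which Slack channel.
POLICY = {
    "alice": {("read_messages", "#sales"), ("post_message", "#sales")},
    "bob": {("read_messages", "#general")},
}

# Slack tokens start with prefixes such as xoxb- or xoxp-.
_TOKEN_RE = re.compile(r"xox[a-z]-[A-Za-z0-9-]+")


def is_allowed(user, tool, channel):
    """Fine-grained check: the (tool, channel) pair must be granted to user."""
    return (tool, channel) in POLICY.get(user, set())


def redact(text):
    """Replace anything that looks like a Slack token before logging."""
    return _TOKEN_RE.sub("[REDACTED]", text)


def call_slack_tool(user, tool, channel, token):
    if not is_allowed(user, tool, channel):
        raise PermissionError(f"{user} may not {tool} on {channel}")
    # A real server would call the Slack API here; we only return a log line.
    return redact(f"{user} called {tool} on {channel} with token {token}")
```

Denying by default (an unknown user gets an empty grant set) keeps a missing policy entry from silently becoming full access.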

No Audit Trail for Secret Usage

- Lack of Visibility: The absence of an audit trail makes it challenging to monitor who accessed sensitive secrets and when, hindering effective incident response.

- Accountability Issues: Without detailed logs, it is difficult to hold parties accountable for unauthorized access or misuse of secrets.

- Compliance and Forensics: Future enhancements should include comprehensive logging and auditing features to track secret usage, which is vital for compliance and forensic analysis in the event of a breach.
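An audit trail for secret usage could look roughly like the following in-memory sketch, which records who asked for which secret, when, and why, without ever logging the secret value. `AuditedSecretStore` and its fields are assumptions for illustration; a real deployment would write to append-only, tamper-resistant storage.

```python
import json
import time


class AuditedSecretStore:
    """In-memory secret store that records every read attempt."""

    def __init__(self, secrets_by_name):
        self._secrets = dict(secrets_by_name)
        self.audit_log = []  # a real system would use append-only storage

    def get(self, name, actor, reason):
        found = name in self._secrets
        # Log the attempt before returning; never log the secret value.
        self.audit_log.append({
            "ts": time.time(),
            "actor": actor,
            "secret": name,
            "reason": reason,
            "granted": found,
        })
        if not found:
            raise KeyError(name)
        return self._secrets[name]


if __name__ == "__main__":
    store = AuditedSecretStore({"slack_token": "example-value"})
    store.get("slack_token", actor="weather-server", reason="post alert")
    print(json.dumps(store.audit_log, indent=2))
```

Failed lookups are logged as well, since repeated denied attempts are often the first sign of misuse.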

My Opinion

Personally, I believe that MCP will remain a powerful tool for local development—especially when used with tools like Cursor IDE, which can leverage its capabilities in a controlled environment. However, integrating the full power of MCP into user-facing applications is still a long way off. The challenges outlined above, particularly around authentication, identity management, and secret handling, must be resolved before MCP can truly shine in end-user environments.

Looking Ahead: Implications and Next Steps

Despite the current challenges, MCP represents a significant step toward a more seamless integration between LLMs and the tools that empower them. For developers, understanding MCP is becoming increasingly important. Concepts like an “MCP App Store” hint at a future where discovering and deploying MCP-enabled services could be as easy as browsing an app marketplace.

Platforms like Composio offer a suite of Model Context Protocol (MCP) services, providing seamless integration with over 250 applications. These integrations encompass a wide array of tools, including Gmail, Slack, GitHub, and Google Workspace, all accessible through a unified API. Composio's managed servers come equipped with built-in authentication support, handling OAuth, API keys, and basic auth flows, thereby simplifying secure connections. This infrastructure enables AI agents and large language models (LLMs) to interact with external tools and services efficiently, streamlining workflows and enhancing productivity.

For businesses and tech enthusiasts alike, the key is to stay informed. While it might be premature to make major strategic decisions based solely on MCP’s promise, its potential to unlock a new era of intuitive, integrated AI applications is undeniable. A cautious but observant approach—keeping an eye on developments and understanding the principles behind MCP—will be vital as this technology evolves.

In summary, although MCP remains a work in progress, its core concept—a standardized communication layer connecting LLMs with external services—holds the promise of being truly transformative. As the technology matures and the current technical challenges are resolved, MCP could unlock the full potential of AI by enabling the development of more powerful, versatile, and user-friendly assistants.

Looking ahead, the future of MCP appears promising. By comprehensively addressing issues such as secure authentication, identity management, and secret handling, developers and organizations can build a more secure, scalable, and efficient ecosystem for AI-powered applications. However, it’s important to note that MCP is not yet fully ready for production-level deployment, and further refinement is necessary before it can be widely adopted in mission-critical environments.

Stay tuned as we continue to track the evolution of MCP and its impact on the future of AI.

Thanks for reading.